DeepSeek is a new AI tool from China that has made big waves worldwide. Although it first drew attention as an alternative to ChatGPT, the company has also released various other models that can be deployed and tested on a personal computer.

They can be used for text summarization, research, code generation and debugging, creating to-do lists, and more.

These models can be excellent tools for boosting personal productivity, building a business solution, or programming a new software tool.

In this article, you will learn how to run DeepSeek on a Mac, which tools to use, and which tasks the models can handle. You will also get an overview of DeepSeek performance on an M4 Mac.

Let’s get started!

What you’ll need to run DeepSeek on Mac

To get the most out of your model, you should have the following:

- A modern computer: ideally a Mac with an M4 chip, although other Mac models are also suitable for machine learning

- 16 GB of RAM: you can also work with 8 GB if you select lighter models. The more memory you have, the more smoothly your tasks will run

- 50 GB of free space

If you have all this, nothing can stop you from starting.
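
If you would rather check the memory and disk requirements from a script than look them up in System Settings, a minimal Python sketch could look like this. The thresholds mirror the list above; the function names are illustrative, and the check assumes a Unix-like system such as macOS.

```python
import os
import shutil

# Thresholds taken from the requirements list above; adjust them to your setup.
MIN_RAM_GB = 16
MIN_FREE_DISK_GB = 50


def total_ram_gb() -> float:
    """Return the installed physical memory in GB (macOS and Linux)."""
    page_size = os.sysconf("SC_PAGE_SIZE")
    page_count = os.sysconf("SC_PHYS_PAGES")
    return page_size * page_count / 1024**3


def free_disk_gb(path: str = os.path.expanduser("~")) -> float:
    """Return the free space in GB on the volume that contains `path`."""
    return shutil.disk_usage(path).free / 1024**3


def check_requirements(ram_gb: float, disk_gb: float) -> list[str]:
    """Return human-readable warnings; an empty list means both checks pass."""
    warnings = []
    if ram_gb < MIN_RAM_GB:
        warnings.append(
            f"Only {ram_gb:.1f} GB of RAM: pick lighter models (7B or smaller)."
        )
    if disk_gb < MIN_FREE_DISK_GB:
        warnings.append(
            f"Only {disk_gb:.1f} GB of free disk space: {MIN_FREE_DISK_GB} GB is recommended."
        )
    return warnings


ram = total_ram_gb()
disk = free_disk_gb()
print(f"RAM: {ram:.1f} GB, free disk space: {disk:.1f} GB")
for warning in check_requirements(ram, disk):
    print("Warning:", warning)
```

A machine that falls short of 16 GB only gets a warning rather than an error, since 8 GB is still workable with lighter models.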

How to run DeepSeek on a Mac?

To run DeepSeek on a Mac, you need a tool for working with LLMs that is built for macOS. The best option for running LLMs on a Mac is LM Studio. To get started with it, follow these steps:

- Download LM Studio

- Proceed to set up

- Choose the model from the list

- Deploy the model

- Run the model

Now, we’ll review these steps one by one.

Download and set up your tool

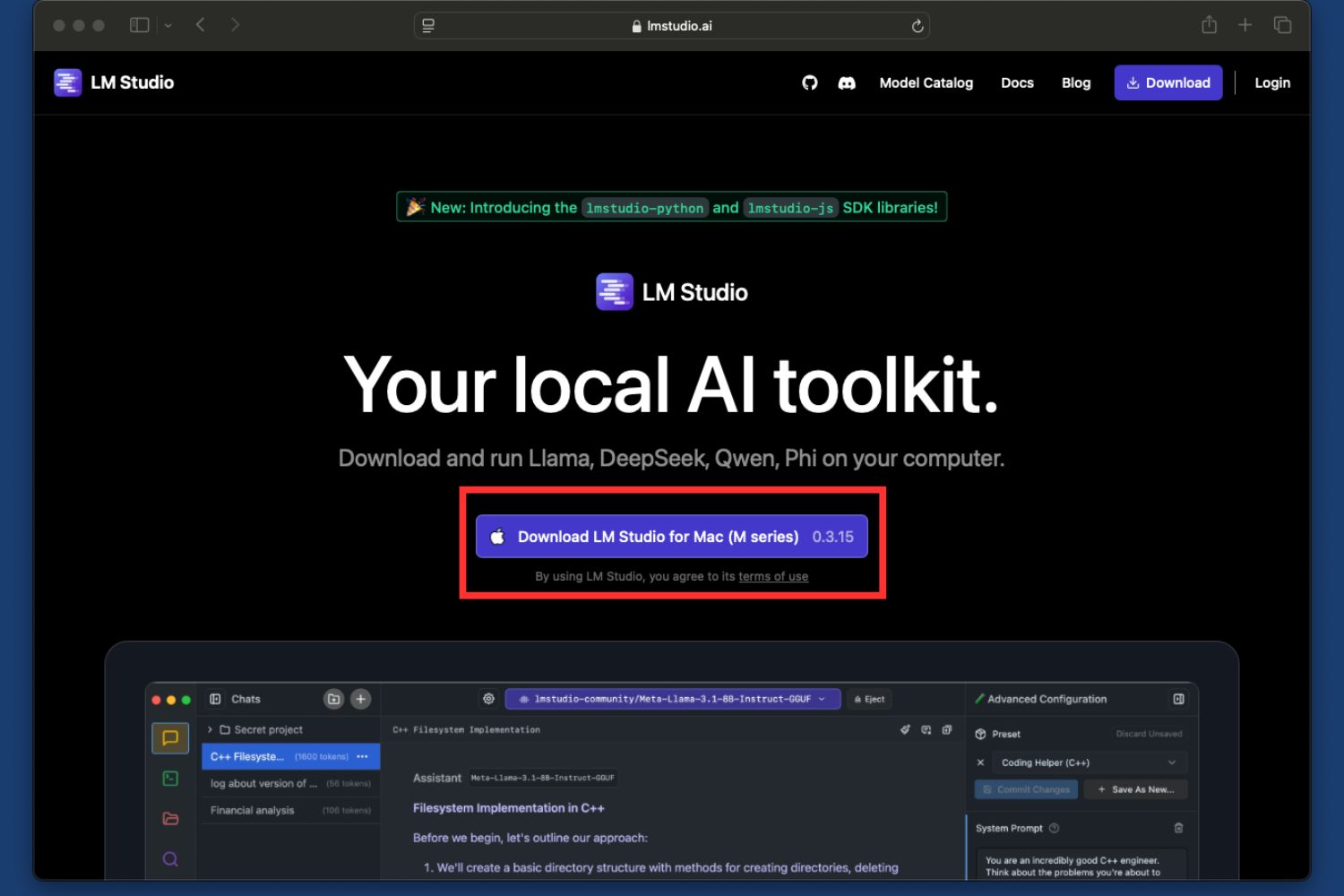

LM Studio is the best tool for running DeepSeek on Mac. It has a simple chat interface and allows you to run models using prompts.

You can download LM Studio from the official site and install it in a few simple steps.

Step 1: Go to the LM Studio website and download your app:

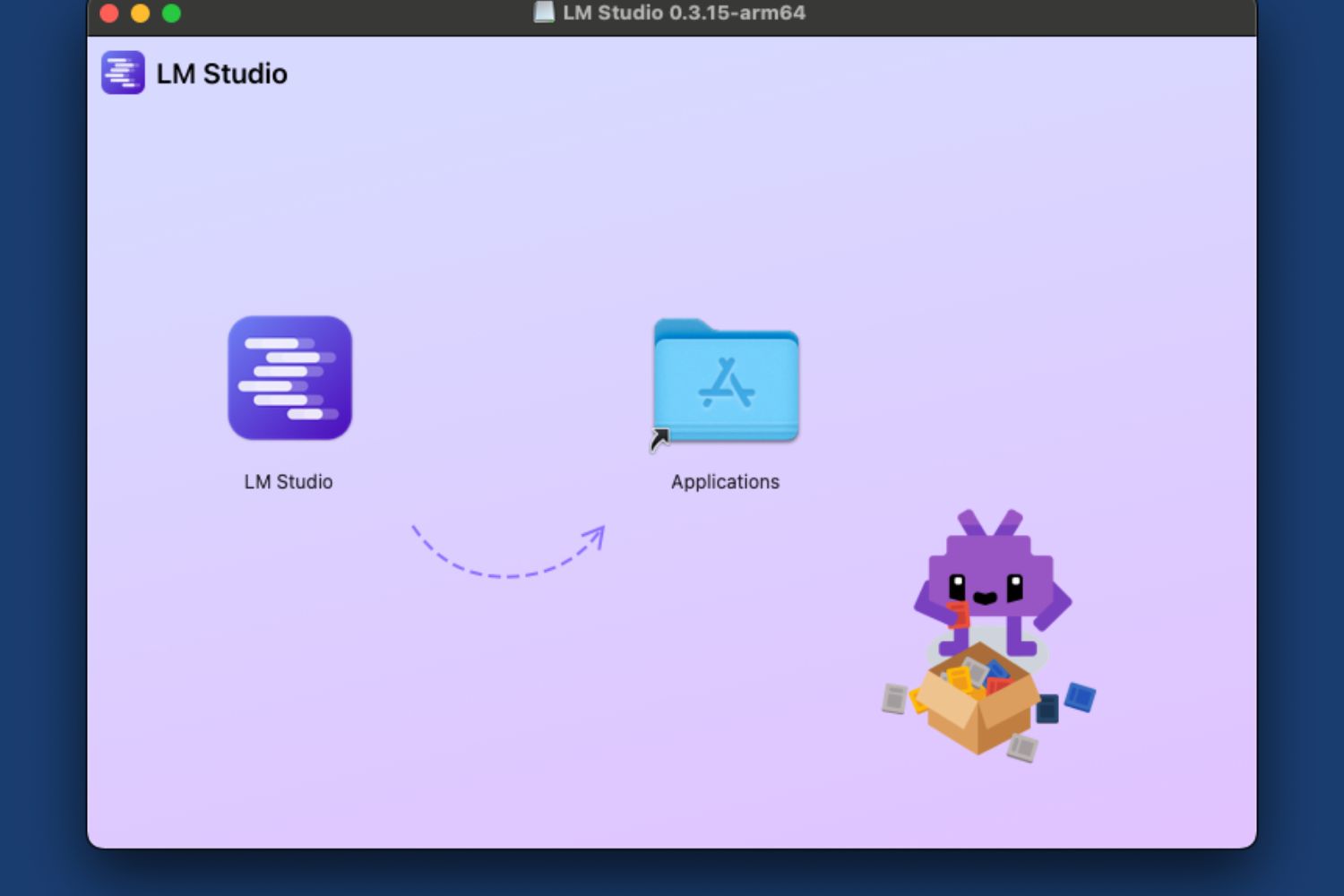

Step 2: When you open the downloaded file on your Mac, the system will ask you to move the app to the Applications folder:

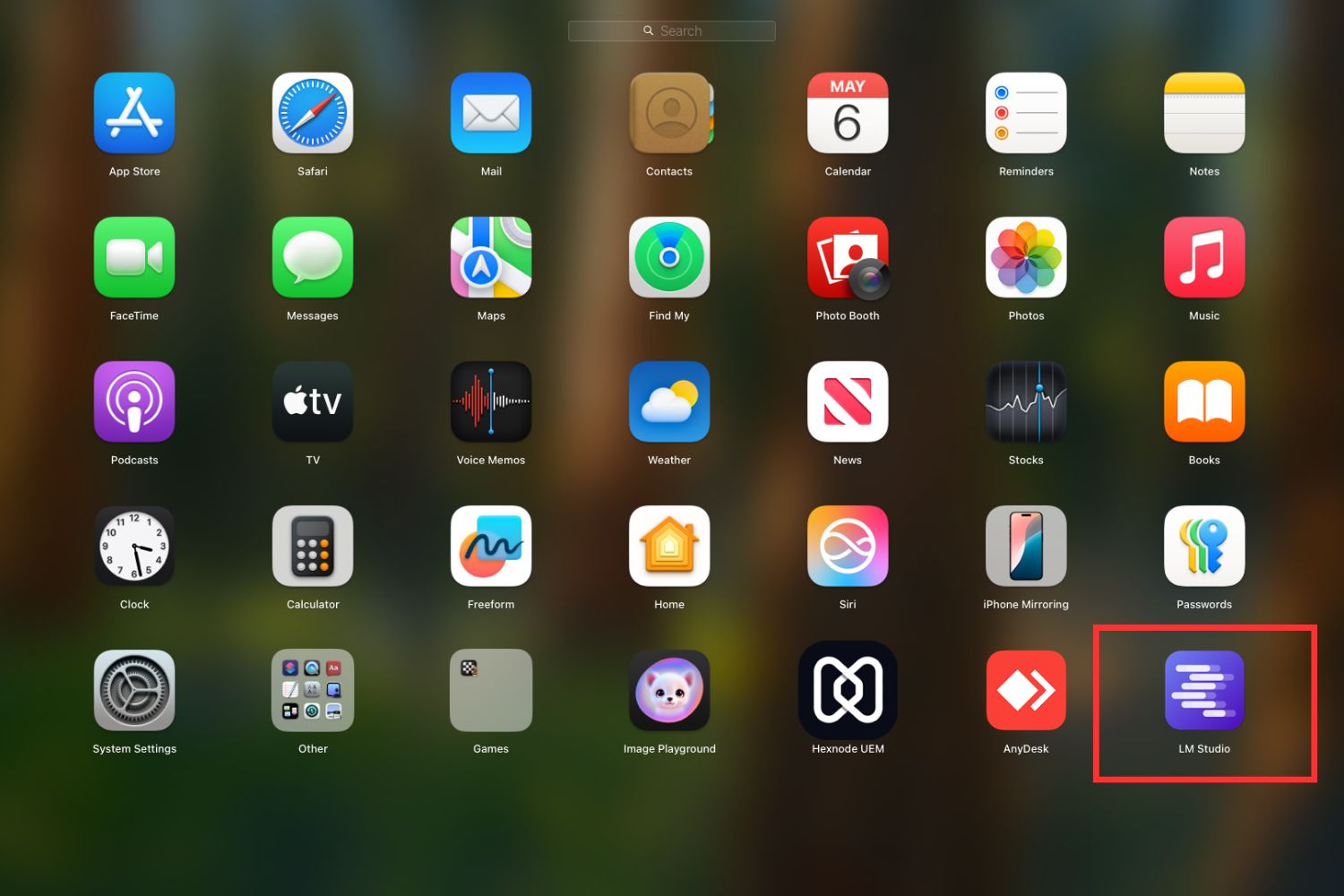

Step 3: Find LM Studio in the Applications folder and open it from there:

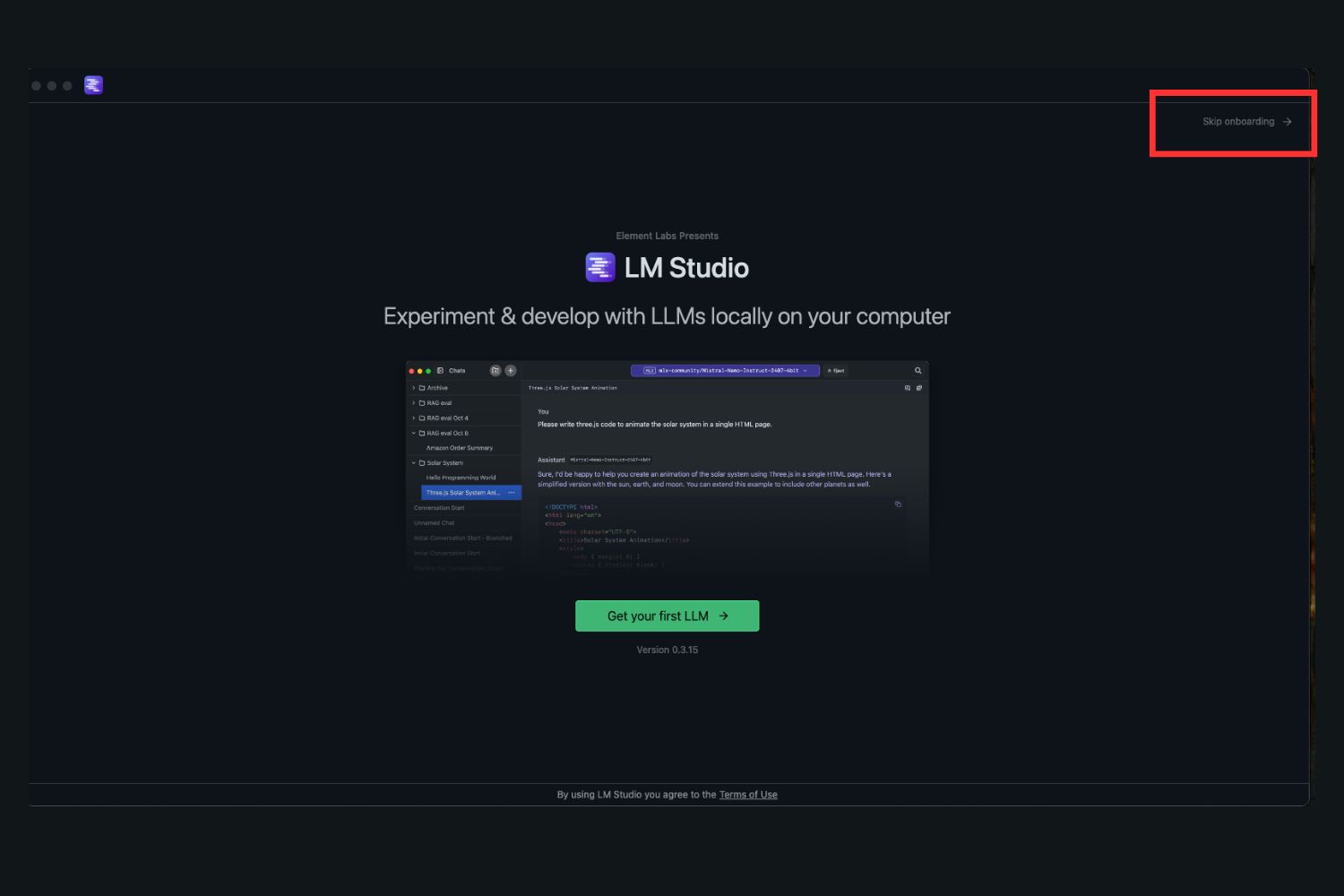

Step 4: You are welcomed by the onboarding screen, which offers to download a model for a welcome tutorial. You can skip this part by clicking Skip onboarding.

Now that the setup is finished, you can move on to choosing your model.

Choose a model from the list

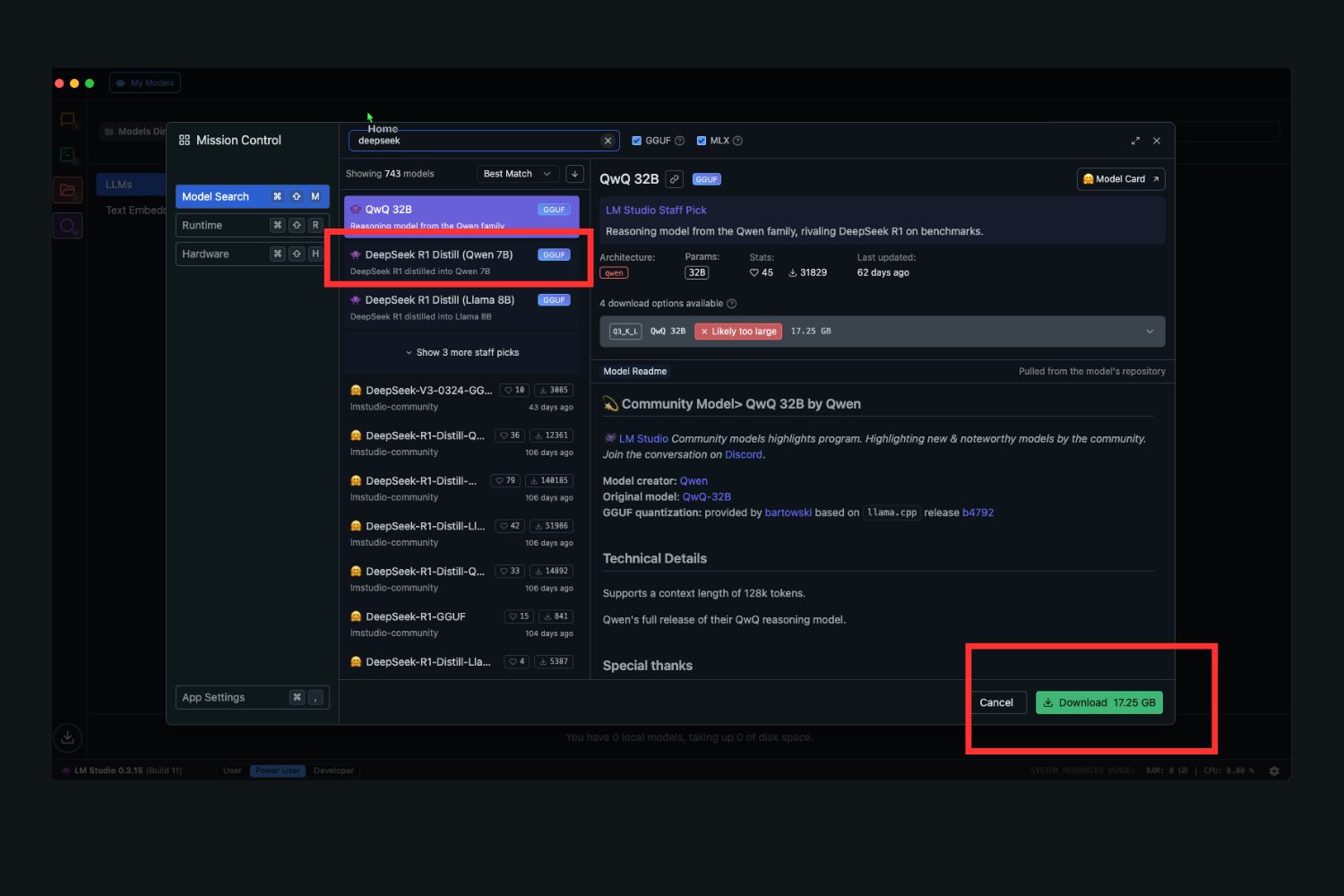

LM Studio is integrated with Hugging Face, so you can access over 1 million models, including over 9K options from DeepSeek.

Remember that models come in different sizes, and the size determines the computing power and memory needed to run them.

The more parameters a model has, the heavier it is. For example, the full-scale DeepSeek model (the one that competes with ChatGPT) has 671 billion parameters (671B) and can only be run in data centers with hundreds of GBs of memory.

The model size (the number of parameters it contains) is often displayed in its name. For example, model names under the DeepSeek umbrella carry markings such as -7B, -8B, and -32B.
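
To make the naming convention concrete, here is a small Python sketch that reads the parameter count out of a model name. The regular expression and the function name are illustrative assumptions, and the sample names are only examples of the style you will see in the search results.

```python
import re
from typing import Optional

# Matches a size marking such as "-7B", "-32B", or "-1.5B" in a model name.
SIZE_PATTERN = re.compile(r"-(\d+(?:\.\d+)?)B\b", re.IGNORECASE)


def parameters_in_billions(model_name: str) -> Optional[float]:
    """Return the parameter count in billions, or None if the name has no marking."""
    match = SIZE_PATTERN.search(model_name)
    return float(match.group(1)) if match else None


# Example names only; the actual list in LM Studio may differ.
for name in [
    "DeepSeek-R1-Distill-Qwen-7B",
    "DeepSeek-R1-Distill-Llama-8B",
    "DeepSeek-R1-Distill-Qwen-32B-GGUF",
    "deepseek-chat",
]:
    print(name, "->", parameters_in_billions(name))
```

Names without a size marking return `None`, so you know to check the model card instead.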

Depending on your RAM, you can select either lighter or heavier models. RAM is crucial here because the whole model has to fit into memory that the GPU can use if you want it to run smoothly, or at least at usable speeds. On Apple silicon Macs, the CPU and GPU share the same unified memory, so the installed RAM sets the limit.

With 16 GB of RAM, you can select a 14B model, but if you are using a Mac with 8 GB of RAM, work with 7B models or lighter ones.
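
The sizing advice above can be sanity-checked with some rough arithmetic. The sketch below is an estimate under stated assumptions, not a measurement: it assumes 4-bit quantized weights, a 20% overhead for context and runtime buffers, and that about 75% of the installed RAM is available to the model. All three figures and the function names are illustrative.

```python
def estimated_memory_gb(
    params_billions: float,
    bits_per_weight: float = 4.0,  # assumption: 4-bit quantized download
    overhead: float = 1.2,  # assumption: 20% extra for context and buffers
) -> float:
    """Estimate the memory a model needs, in GB."""
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / 1e9


def fits_in_ram(
    params_billions: float,
    ram_gb: float,
    usable_fraction: float = 0.75,  # assumption: the OS and apps keep the rest
) -> bool:
    """Return True if the estimated footprint fits into the usable share of RAM."""
    return estimated_memory_gb(params_billions) <= ram_gb * usable_fraction


for size in (7, 14, 32, 671):
    needed = estimated_memory_gb(size)
    print(
        f"{size}B: about {needed:.1f} GB | "
        f"fits in 8 GB: {fits_in_ram(size, 8)} | "
        f"fits in 16 GB: {fits_in_ram(size, 16)}"
    )
```

Under these assumptions, a 7B model fits an 8 GB Mac and a 14B model fits a 16 GB Mac, which matches the recommendation above. Less aggressive quantization (8-bit or 16-bit) raises the footprint accordingly.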

If you connect to a cloud Mac with more memory, you can still work with heavier models even when your own Mac or other computer has only 8 GB.

For example, at RentAMac.io, you can rent 16GB dedicated Macs on a time-share basis. The installation is quick, and the speed is high, so you have more freedom to experiment with different model weights.

So, here are the steps to select the model:

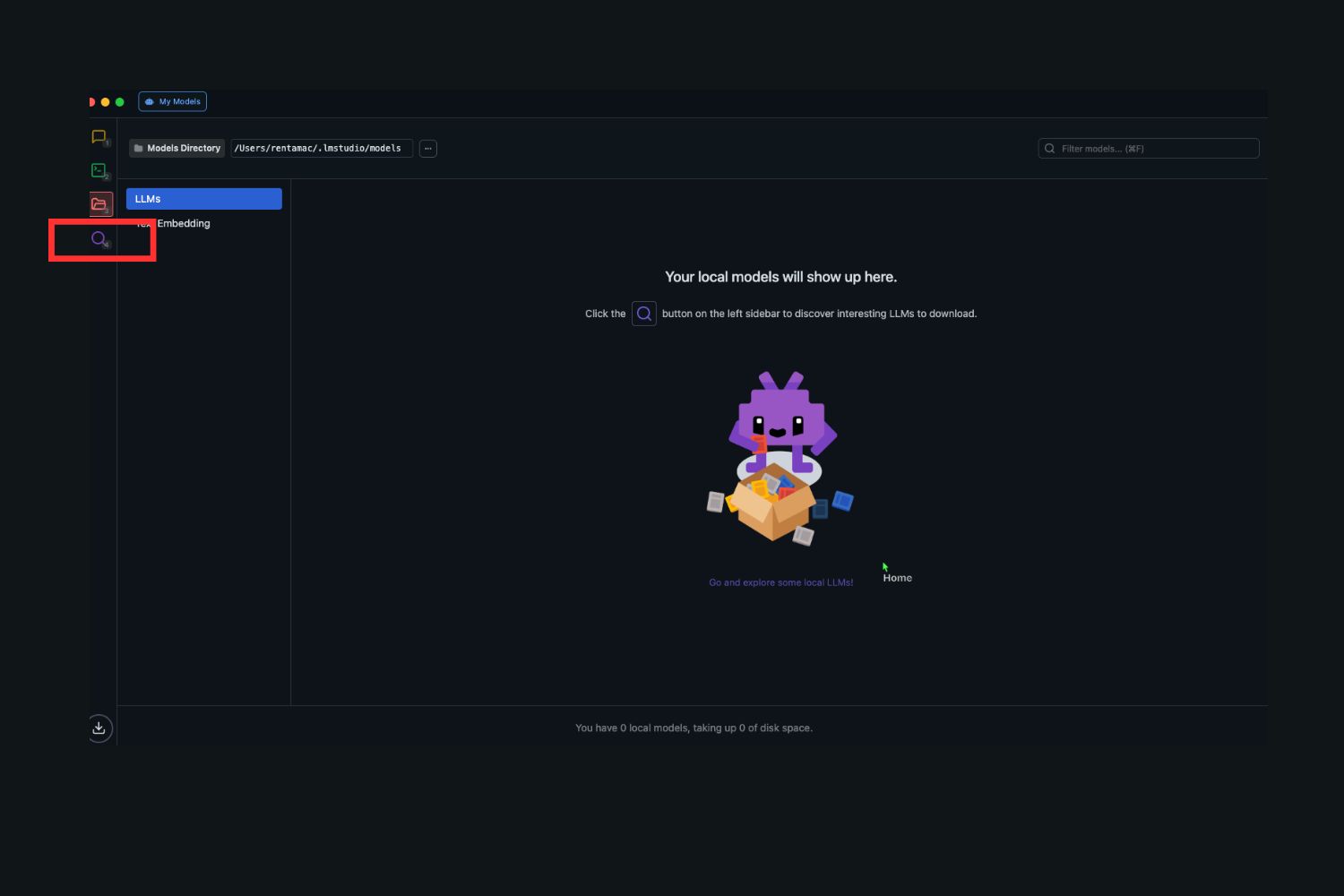

Step 1: Go to the Search button in the LM Studio menu.

Step 2: Go to the search bar and type the model name:

Step 3: Select the model with the weights you need and press the Download button:

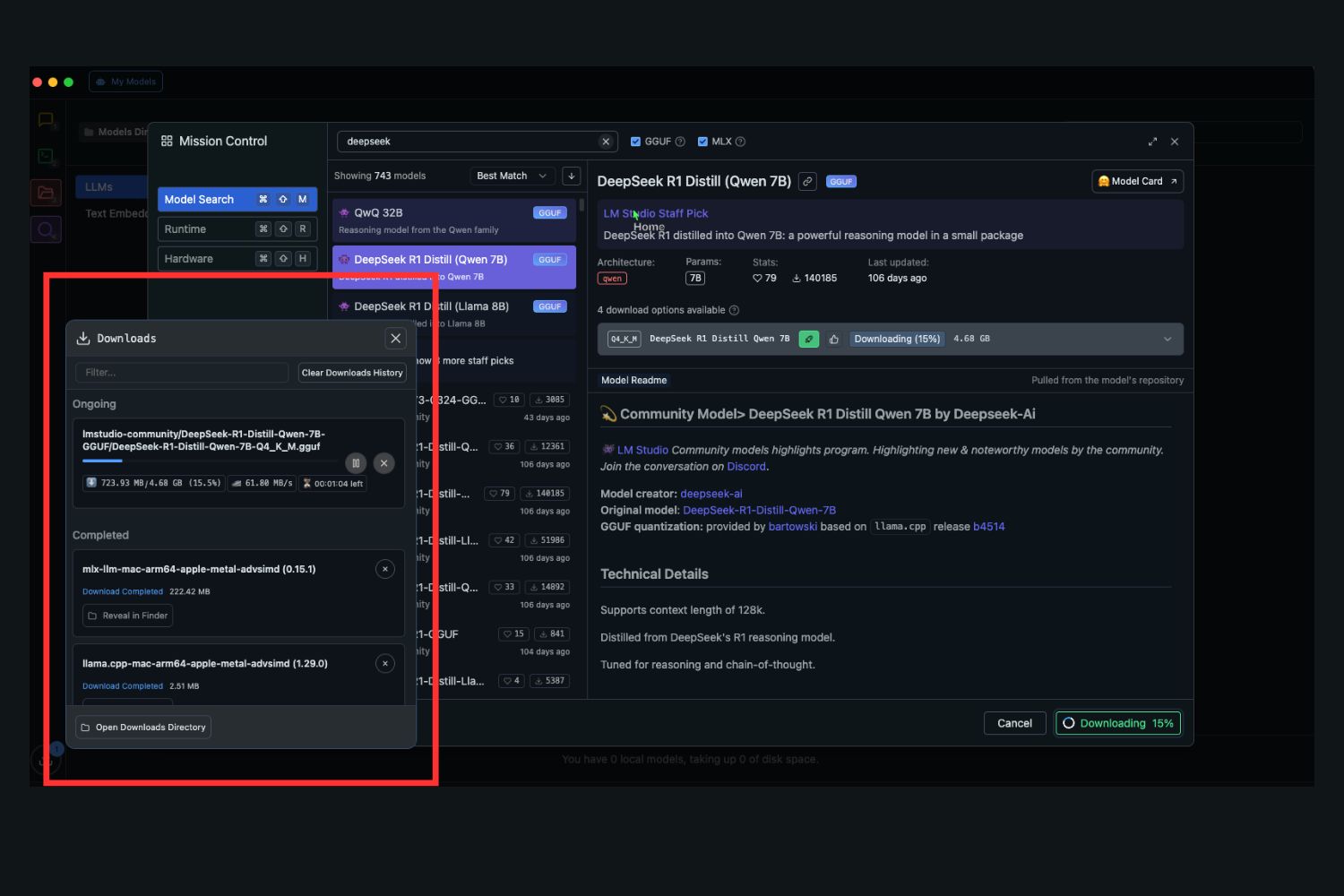

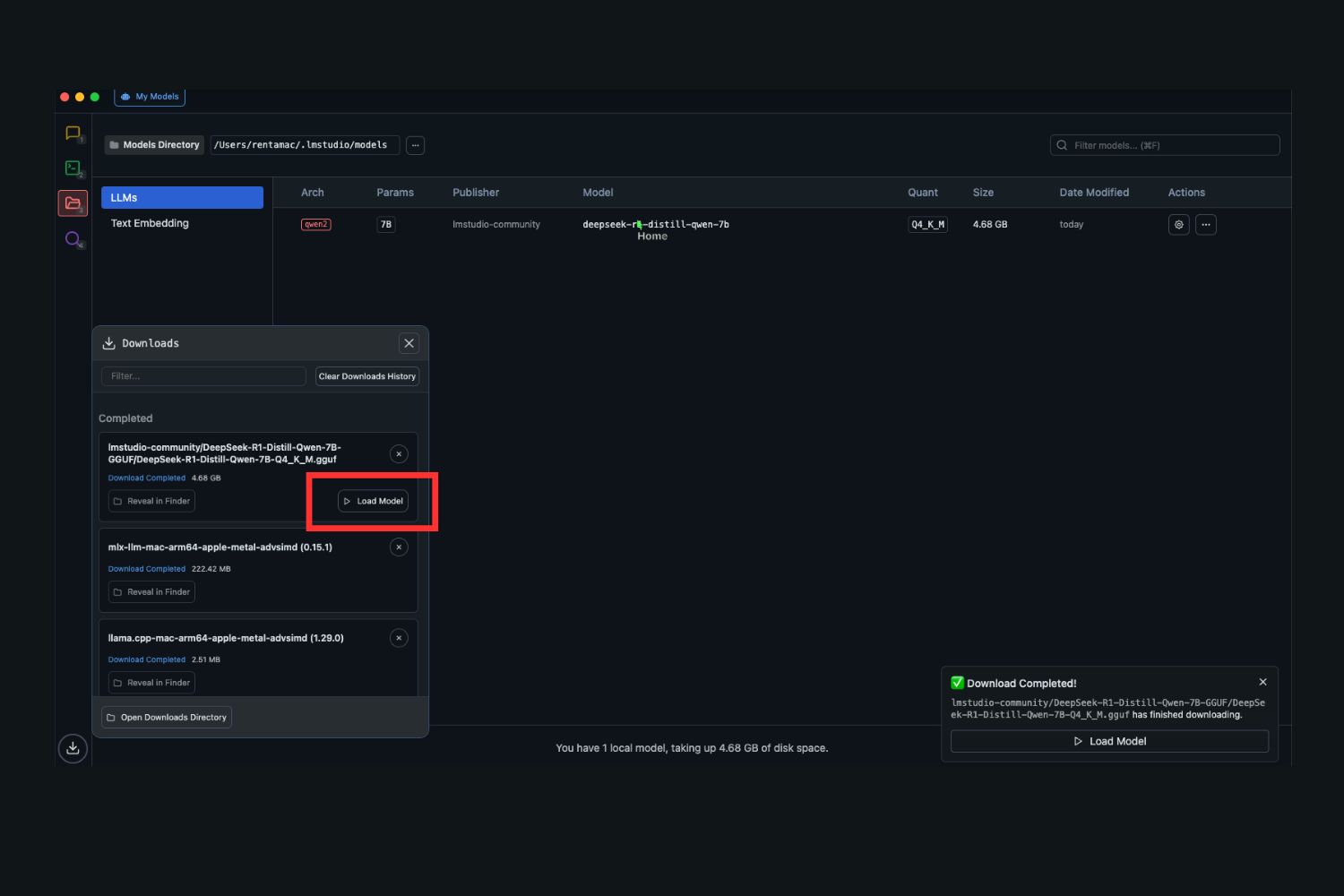

Download the model

After you press the Download button, the download starts. It can take a minute or longer, depending on the model size and your connection speed.

Once the model is downloaded, press the Load button to proceed:

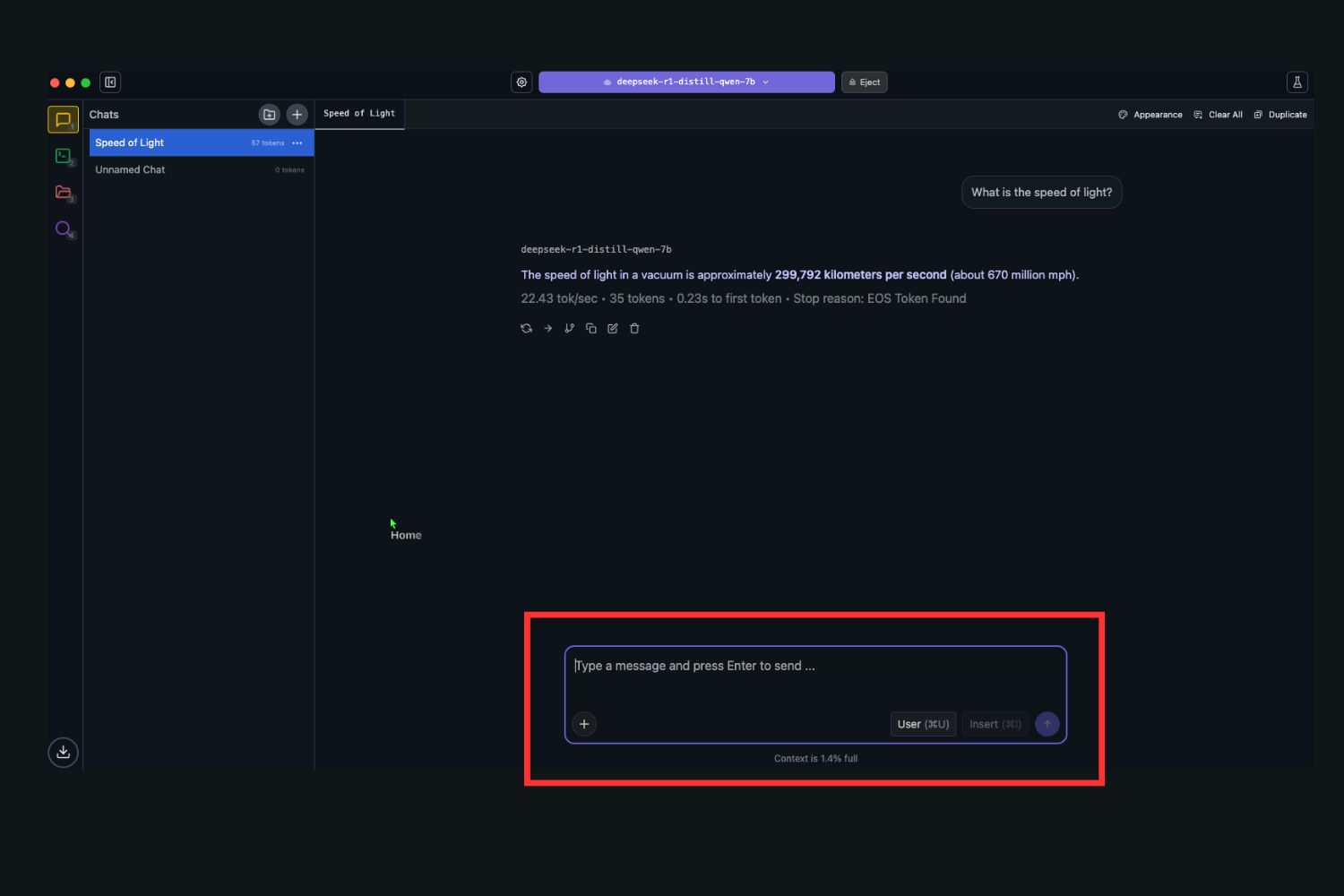

Run the model

After you press the Load button, you are redirected to the chat interface, where you can give tasks to your model and get results.

You can do a wide range of tasks with DeepSeek models running on your Mac.

DeepSeek models are great for researching, brainstorming, document processing, code generation, and debugging.

Here are several examples of how you can use them in your work:

- Software development: generate, test, and debug code, get assistance in creating applications, and more

- Writing and creative work: generate texts, brainstorm ideas, create blog topic lists, etc. You can even go beyond text. Many creators also run image-generation models like Stable Diffusion locally to extend their creative workflows.

- Learning: explain learning materials at different levels of complexity (e.g., explain quantum computing for fifth graders)

- Optimization: create flexible work schedules for staff, plan activities, etc.
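
Tasks like these can also be sent from a script instead of the chat window. The sketch below goes beyond the steps covered in this article and rests on several assumptions: that you have started LM Studio's local server, that it listens on its default address `http://localhost:1234`, and that it accepts OpenAI-style chat completion requests. The function names are illustrative.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default address.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt: str, model: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str, timeout: float = 120.0) -> str:
    """Send the prompt to the loaded model and return the text of its reply."""
    request = build_chat_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running and a model loaded, a call such as `ask_local_model("Explain quantum computing for fifth graders", model="your-model-identifier")` should return the reply as a string. Here `your-model-identifier` is a placeholder for the identifier LM Studio shows for the model you loaded.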

Mac M4 DeepSeek Performance

When discussing DeepSeek performance, the first thing to mention is that small 7B models are not as fast or capable as the commercial versions running in data centers. However, they can handle the tasks mentioned above, especially when deployed on powerful Macs. M4 Macs lead the lineup thanks to the powerful M4 chip.

DeepSeek performance on an M4 Mac is better than on computers with less powerful processors: responses are generated faster and workflows are smoother. The chip itself does not change a model's answers, but with enough memory you can run larger models, which are less prone to generating nonsense or failing your tasks.
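
To see how fast a model actually runs on your own machine, one option is to measure throughput in tokens per second. The helper below is a minimal sketch: it times any function that produces text and reports how many tokens it generated. The `fake_generate` function is a hypothetical stand-in so the example runs without a model; replace it with a real call to your model that returns the number of generated tokens.

```python
import time
from typing import Callable


def tokens_per_second(generate: Callable[[], int], runs: int = 3) -> float:
    """Call generate() `runs` times and return the average tokens per second.

    generate() must return the number of tokens it produced.
    """
    total_tokens = 0
    start = time.perf_counter()
    for _ in range(runs):
        total_tokens += generate()
    elapsed = time.perf_counter() - start
    if elapsed <= 0:
        raise ValueError("Elapsed time too short to measure")
    return total_tokens / elapsed


def fake_generate() -> int:
    """Hypothetical stand-in for a model call: waits briefly, 'produces' 20 tokens."""
    time.sleep(0.05)
    return 20


print(f"{tokens_per_second(fake_generate):.1f} tokens/s")
```

Running the same prompt against different model sizes gives you a simple way to compare them on your hardware.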

Benefits of Running DeepSeek Locally on Mac

Since you can use the free online DeepSeek tool, why run models locally? There are several reasons:

- Privacy: local models don’t send your data anywhere; everything you type in and generate stays exclusively on your computer

- Offline access: You can work in areas with bad connectivity, or during flights and long-haul trips

- No subscription fees: you get privacy and flexibility for free

- Capability: local models work very well within the scope of tasks described above

One of the side effects of the growing ML adoption rate will be the democratization of AI: its spread from large-scale solutions like DeepSeek or ChatGPT down to custom solutions tuned for personal use. Running DeepSeek models on a Mac is a good example of this emerging trend and a great way to develop future-proof ML skills.

Summing up

Running DeepSeek models locally on your computer is a great way to always have a well-performing assistant at hand for different tasks. You don’t need to pay additional fees, and once a model is downloaded, you don’t need an internet connection to use it. DeepSeek also performs well on M4 Macs, making this Mac series a great option for democratizing AI and opening its possibilities to startup founders, small teams, and individuals wishing to explore AI privately.

Want to start working, learning, and experimenting with DeepSeek models immediately?

Rent a Mac to maximize DeepSeek performance and get the best results possible with our powerful M4 Mac minis.